Google Duplex Review - How far does it go?

Yesterday was the Day 1 Keynote - the main keynote of Google I/O 2018. They always go hard with software there and this year was no exception. We got all kinds of great announcements: new Android P features, especially the new gesture navigation, which will be interesting to play around with pretty shortly. New Google News stuff, new Google Maps features, the Android dashboard, all this great stuff.


In the middle of all of that, one of the most interesting new announcements went by. It was kind of early, so if you tuned in late you might have missed it. Maybe you were just tuning in to find out what Android P would be called - we still don't know.

But they showed a new behavior and they're calling it Google Duplex. It's a behavior inside of Google Assistant that they're still working on. It's a long way from being finished or from us ever using it.

You know how they're always adding new features to Google Assistant to make your life easier: new reminders, new routines, new predictive things, new things it does to help you out.

Google Duplex will make it so that you never have to call a service business ever again.


Let's say I want to get a haircut next Monday at around noon, and this is at a place that doesn't do online reservations, so you have to call in to make your reservation, and you're not really into that. So what you do is ask Google Assistant, 'Hey Assistant, make an appointment for my haircut next Monday around noon,' and it will actually call that hair salon, pretend to be a human, talk to the person that picks up, and get you a reservation for that hair appointment at around noon (watch real time example in video below).

Did Google Assistant just pass the Turing test? Did that just happen? I think it literally just did. That was a real conversation with a real person at a real salon.

That is both amazing and kind of terrifying.

It also makes Siri look really bad. It had all the right inflections and pauses, and the intonation, the way it talks, was very convincing. If I was on the other end of that phone and I got that call, I don't know that I would think that was a robot. If I wasn't told ahead of time, I'm pretty sure I would have been convinced that's a human.

So there's that on stage, and there are also a bunch more examples in a blog post that Google's posted, with more scenarios that they've gotten it to successfully navigate through in this kind of human conversation.

That just brings me back to how I feel about it a whole day later, and really, from what I've seen, there are two main sides to this:

Number one is the incredible technical achievement by Google, with the machine learning and the artificial intelligence and the natural language processing and all of that. This is the culmination of all of their years of listening to our voices to make Duplex this good.

It really is something special in 2018.


Look, we've all gotten those robocalls that are obviously fake, right? They're like, 'Hi, I'm, I'm Karen, want to go on a cruise?' You ask it one question and it immediately falls apart.

This seems like it'll legitimately make people's lives easier. But then there's the whole other side of it, and it's actually bigger than I was initially thinking: should we be worried about this?

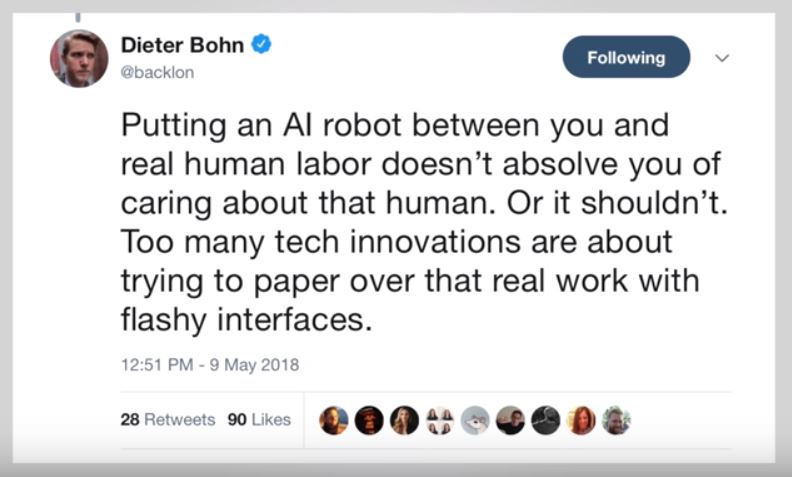

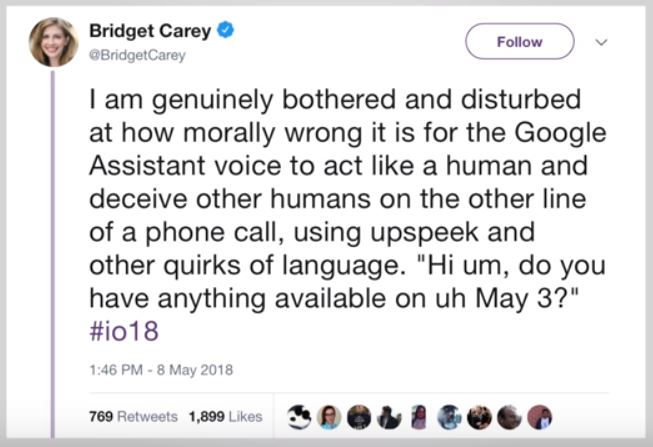

So I've seen a lot of reactions to this lately, on Twitter especially and in some videos, of people saying this is a terrible idea. Like, why would Google do this, tricking somebody into thinking they're talking to a human when they're not?

That is deeply concerning and I understand a lot of it, even though I'm mostly on the side of this particular feature being really helpful. But if I worked in service, I think I would still want to know when I'm not speaking to a human.

You know what I mean? I don't have any logical reason why. It doesn't even really matter on my end. But the question is, does Google have an obligation to let that person know that they're not speaking to a human?

I think a lot of people say yes, you've got to tell them, you've got to immediately let them know that it's a robot. But on the other hand, if you're coming from Google's point of view, you have to mimic a human as closely as possible to make it work.

You want the person on the call to actually pick up, respond to you, and book that appointment. They're listening to humans all day, they're talking to people on the phone. If they get a call that just says, 'Hi, I'm a Google Assistant,' they're probably gonna hang up. They're not going to want to answer that. So you actually have to blend in and make it as seamless as possible for Google to do their job and for the Assistant to actually assist you.

So I know it's not full open-ended AI.

It's not at that creepy, pseudo-human robot status. It hasn't gotten quite there yet. It has a very specific goal that it has to tunnel in on. But I guess it's interesting that, basically, you can see these goal posts getting wider.

Back in the day we used to just say, look, I just want the assistant to tell me what the weather is tomorrow and be as human as possible. So you'd say, 'Hey Google, do I need a jacket tomorrow?' and you'd get your answer. Now the goal post looks like: I want the assistant to just make a hair appointment for me on Monday. I don't really have the time to call, I'm gonna go get on the train to work, just make that appointment for me. And now it's gonna start doing that.

But then next, the goal post is gonna be what? I want it to edit my YouTube videos for me; I want it to run a company; I want it to drive me to work. How far does it go?

I guess that's really my question at the end of this: how far does it go?

I had this conversation briefly with Neil deGrasse Tyson, actually, in a video. It's pretty sweet. I would love to have this conversation with Elon Musk; he talks about this kind of stuff all the time. But for real, it's one of those AI versus machine learning things where it kind of starts in a box, and it's really convincing and excellent and useful in this box, but you've got to keep it in that box.

So my final thoughts on Google Duplex are this: I don't know if people care that they're talking to a robot. I think there's gonna be people on both sides of the spectrum. But I feel like it wouldn't be that bad if you know right off the bat you're talking to a robot.

Like when I trigger the assistant on my phone, or Siri or Alexa or any of those, and it responds like a human, I still know it's a robot. It can be as seamless and human-like as possible in the voice and the diction, but because I'm triggering it myself on my phone, I know it's a robot.

But when you get a phone call and you answer it, that gets weird, because you think you're talking to a human, and then you might find out during the call that you're actually talking to a robot and you weren't told. Then it kinda feels weird, then it feels creepy.

So that brings us to number two, which is just: how far does it go?

I always wonder what we are willing to do with robots. That's a weird question, but really, how far are we gonna go with these robot assistants in our lives? Google Duplex is really triggering the intense questions out here.
